Machine learning, artificial intelligence, deep learning, synthetic data: all of these terms can safely be classified as buzzwords.

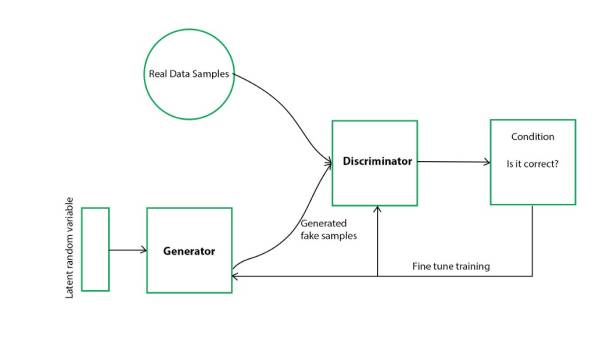

Over the past year we have supported the startup Smartest Learning in developing their app. The Smartest app is built on the idea of turning learning material into interactive practice tests quickly and easily. All you have to do is scan the material with the app, and Smartest automatically creates various tests or flashcards. This is made possible by a combination of computer vision and natural language processing, both subfields of machine learning. In this specific case, proven algorithms and data sets are used. But what if the underlying data has to be collected and the models built from scratch first? And how do you ensure data quality?

These sound like complex questions, and the subject matter is indeed anything but trivial. But because we think the topic is important, we would like to share our knowledge as simply and understandably as possible, and pass on our enthusiasm for it.

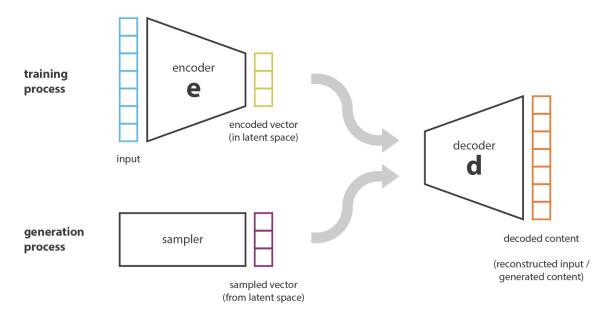

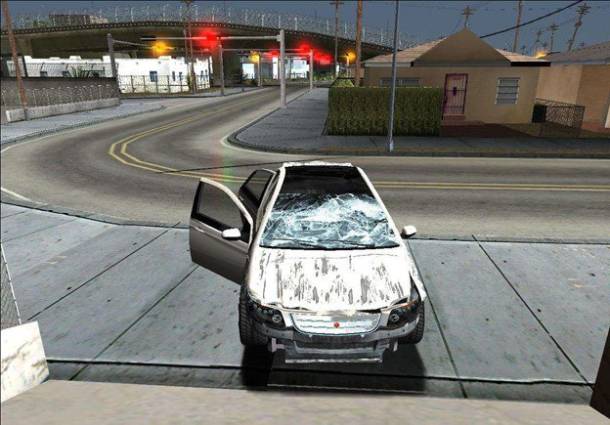

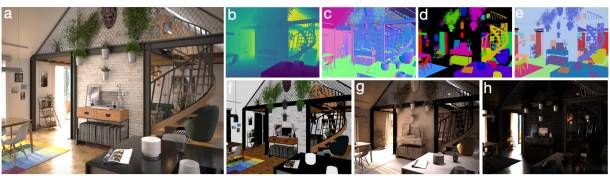

Fortunately, we have a specialist in our own ranks, so we are letting him have his say. Pascal is not 'only' our blockchain consultant, but also an expert in machine learning. With DAITA Technologies, he has founded a company that specializes in processing data for artificial intelligence.

Let's hear what Pascal has to say about machine learning and synthetic data!