Hurdles on the way to the open world app

Current AI services such as ChatGPT already show what dialogue interfaces feel like. However, open-world systems will only become truly useful when they are deeply connected to the operating system, personal data, vehicles, smart homes and profiles. Whether we want this ubiquitous networking remains to be seen - but it is probably only a matter of time before the technology matures and social acceptance follows suit.

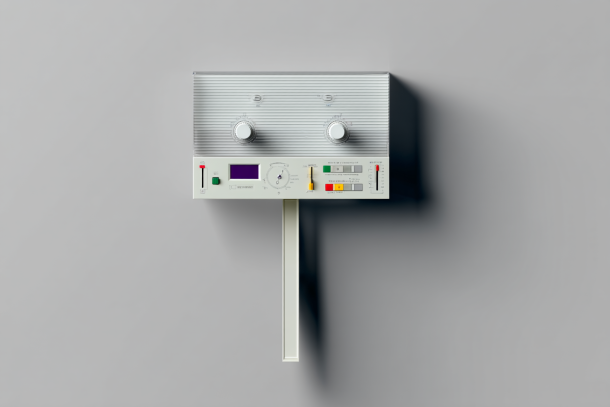

UX and trust

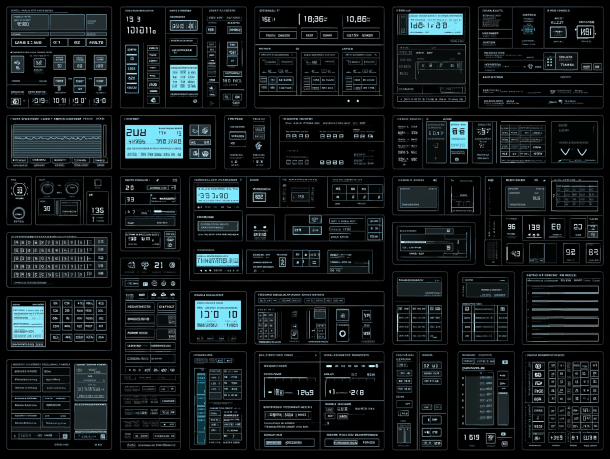

An open system is only useful if it remains simple. AI dialogues must be intuitive and error-tolerant. Unexpected results must not be frustrating. Transparency helps: Users can see what data the AI is using and can customise outputs. This creates trust - precisely because a lot happens in the background.

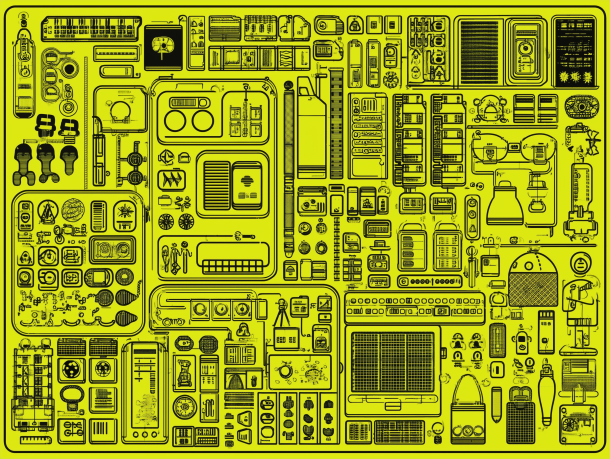

Misinterpretation and ambiguity

Natural language is often vague. "Make me a note app like Post-it" - simple text list or colourful sticky notes with alarm? AI understands context, but doesn't guess every detail. A clarifying dialogue mode ("Should the notes remind you?") intercepts misunderstandings. This requires careful interaction design, otherwise the dialogue becomes tedious.

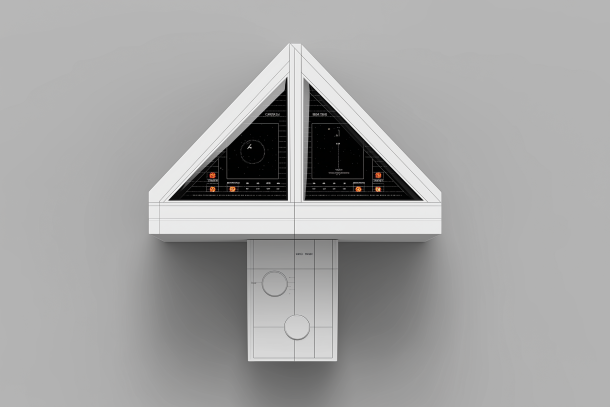

Modularity and technology

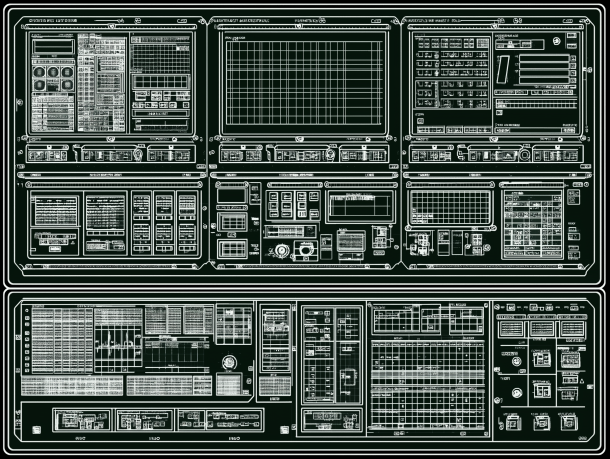

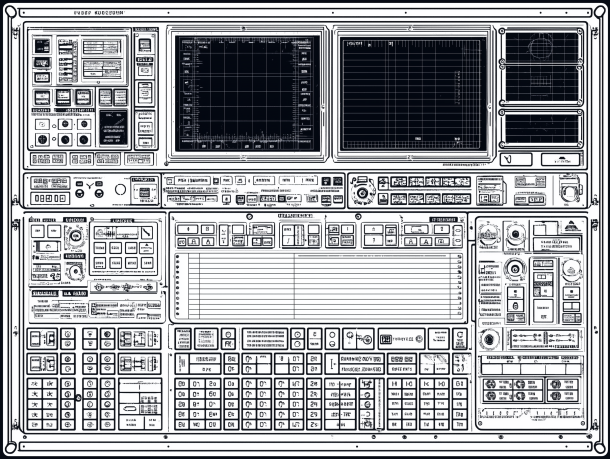

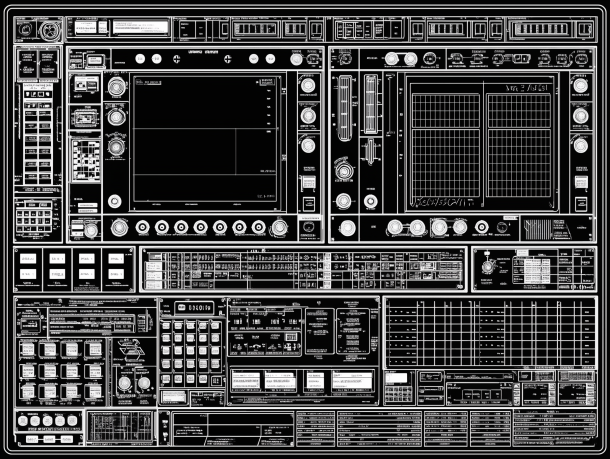

A real open-world platform needs standardised building blocks - camera, data input, diagram, email, etc. The AI puts them together like Lego building blocks. The challenge: covering countless combinations with a limited number of modules or generating modules dynamically. Both are complex and require clear interfaces.

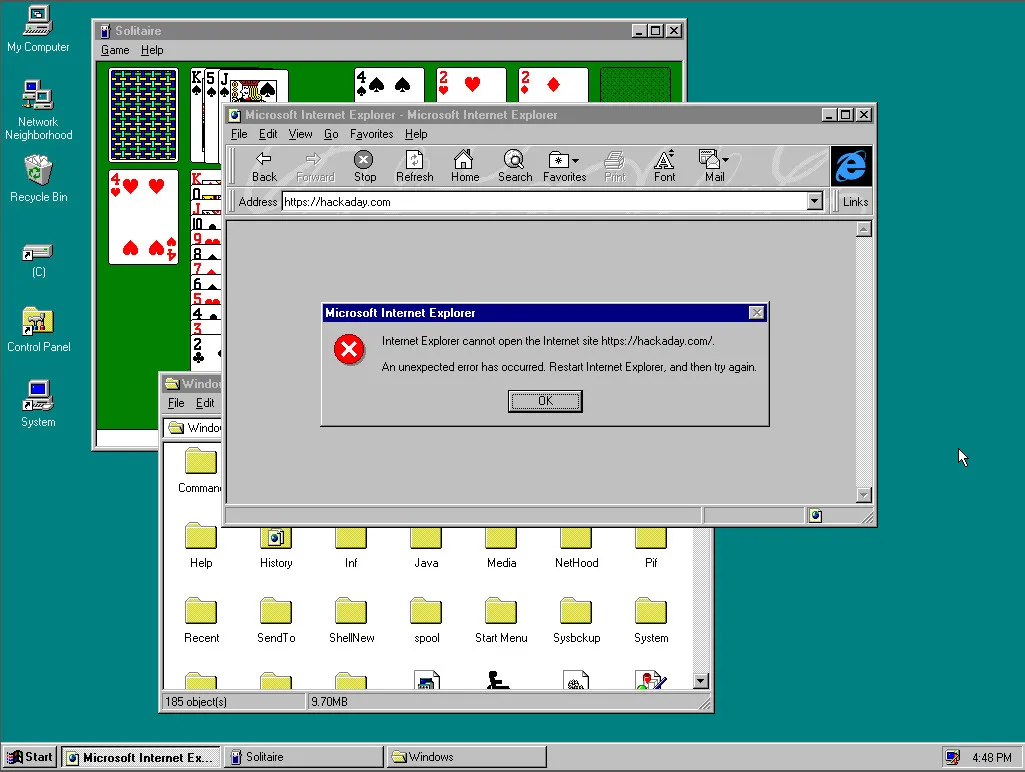

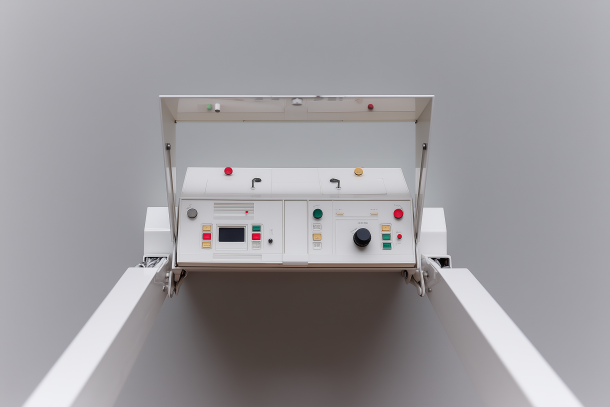

Quality assurance and troubleshooting

User-generated functions can contain errors - unclear description, AI dropouts. Who fixes bugs if there are no professional developers behind them? The platform must offer automatic tests, error messages and self-healing mechanisms so that problems are quickly recognised and corrected.

Acceptance and learning curve

For many, open world means a paradigm shift: instead of downloading ready-made apps, they formulate their wishes themselves. This requires guidance and a suggestion system that helps with the formulation. Only when assistants, device data, vehicles, home control and profiles are seamlessly linked will the concept become truly suitable for everyday use - and social acceptance can grow.

Safety & ethics

The more open the platform, the greater the risk of misuse. The AI must not disclose sensitive data, make discriminatory suggestions or hallucinate incorrect instructions. Clear guidelines (e.g. in accordance with the EU AI Act), content filters and human control instances are necessary to ensure long-term trust.

One particularly critical issue here is prompt injection - i.e. the targeted subversion of AI instructions through manipulated input. This article by Arun Nair impressively shows how quickly a system can be misled if security mechanisms are missing.

Such attack vectors show how important content filters, robust context delimitation and clear model governance are. Design must also share responsibility here - for example through smart interface boundaries, feedback mechanisms and comprehensible dialogue processes.